Disha Gupta

In corporate learning and development, the effectiveness of training programs stands as a critical determinant of organizational growth and success. This is where training evaluation models come into play, offering structured frameworks to assess the impact, efficiency, and value of training initiatives.

Training evaluation models provide invaluable insights into how well training resonates with learners, influences behavior change, and aligns with business objectives. In this article, we provide an overview of popular training evaluation models, explore their benefits, share real-world applications, and break down best practices for implementing each.

Training evaluation models are systematic frameworks designed to assess the effectiveness, efficiency, and outcomes of training programs within organizations. These models provide a structured approach to measuring the impact of learning and development strategies on learners, as well as their alignment with organizational goals. They help organizations gather data, analyze results, and make informed decisions to optimize training strategies.

Training evaluation models consist of various levels or stages that guide the evaluation process, ranging from assessing participant reactions and learning outcomes to measuring behavior change and business impact. Evaluation models offer valuable frameworks for gauging the effectiveness of training efforts, facilitating continuous improvement, and demonstrating the value of learning initiatives to stakeholders.

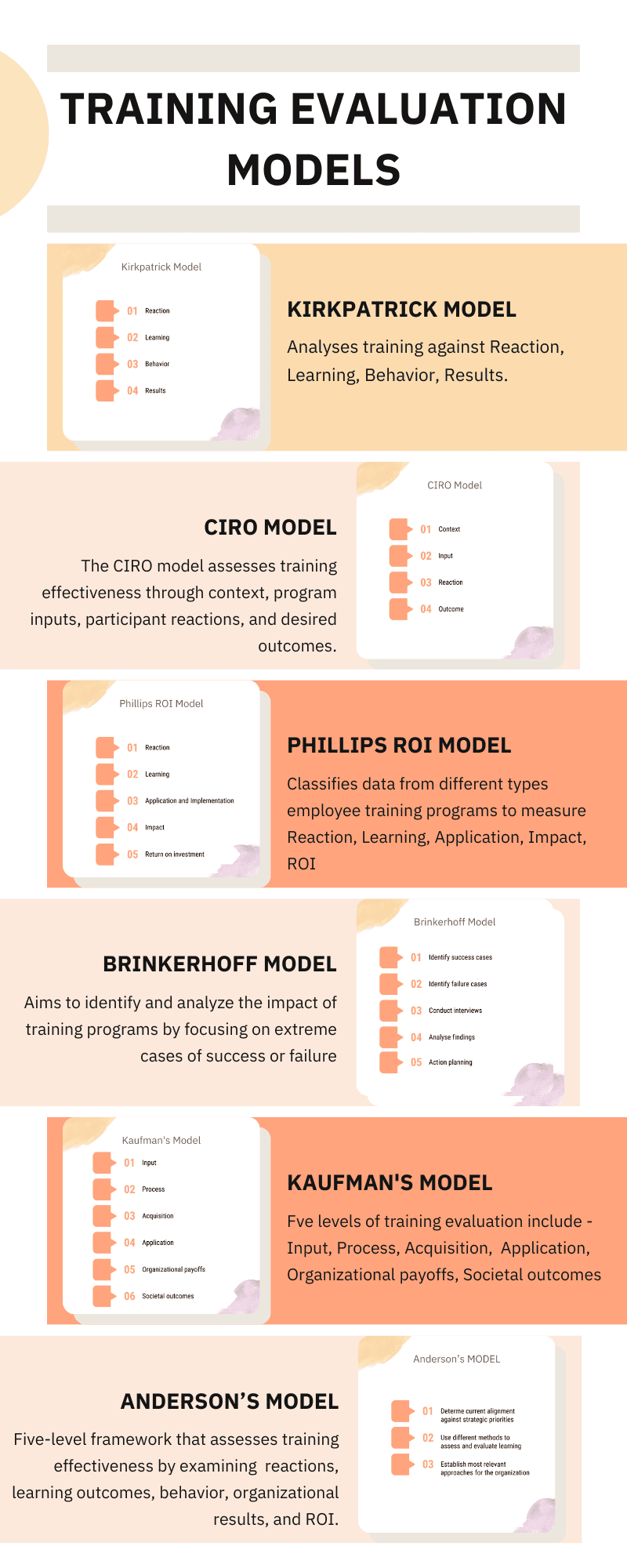

Here are six of the most common training evaluation models.

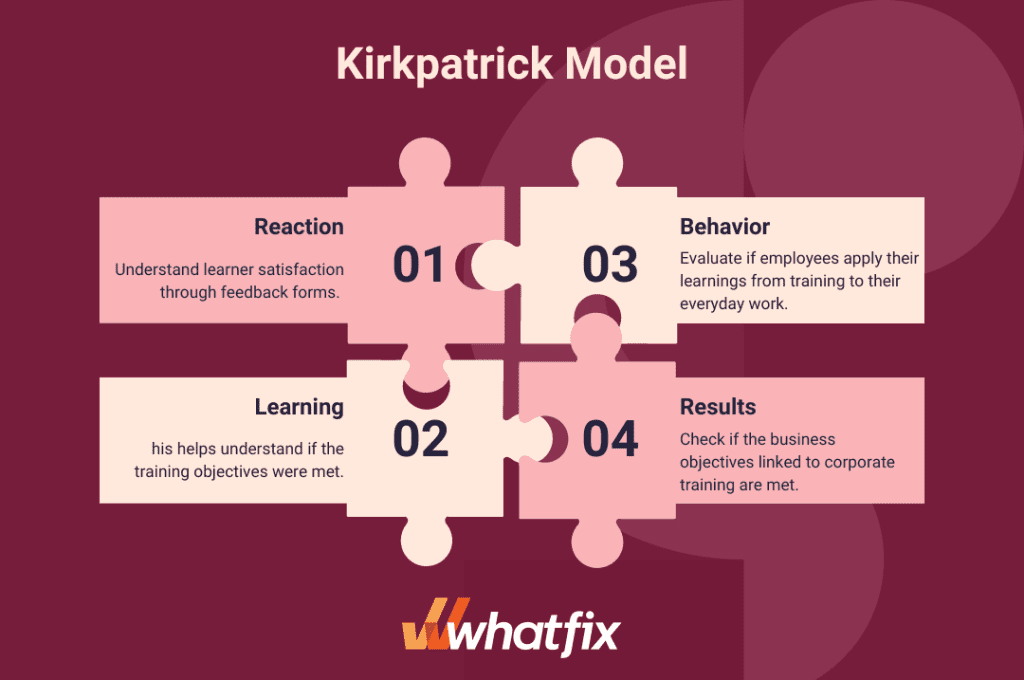

The Kirkpatrick Model of training evaluation is a well-known L&D evaluation model for analyzing the effectiveness and results of employee training programs. It accounts for both informal and formal styles of training and rates them against four levels of criteria:

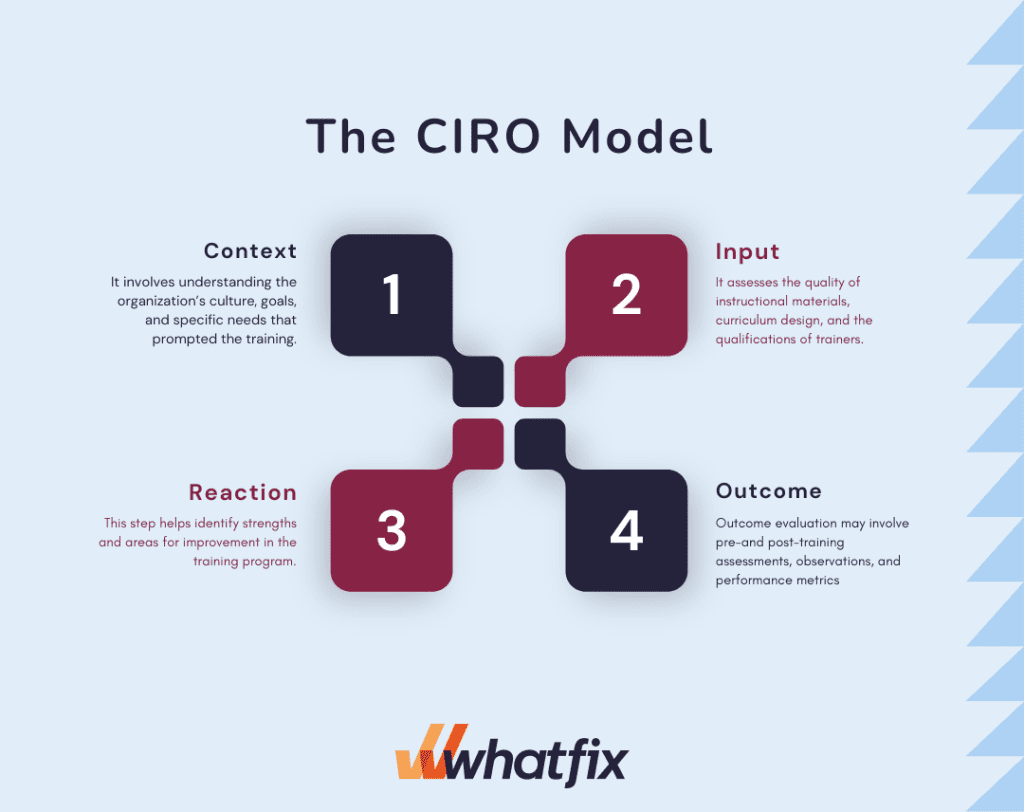

The CIRO model of training evaluation, developed by Peter Warr, Michael Bird, and Neil Rackham, stands for Context, Input, Reaction, and Outcome. This comprehensive framework offers a holistic approach to assessing the effectiveness of training programs. Here’s a breakdown of each component:

The CIRO model emphasizes a comprehensive view of training evaluation by considering the broader context, the quality of training materials, participants’ reactions, and the tangible outcomes of the training program. This approach ensures that training efforts are not only well-designed and engaging but also effectively contribute to improved performance and organizational success.

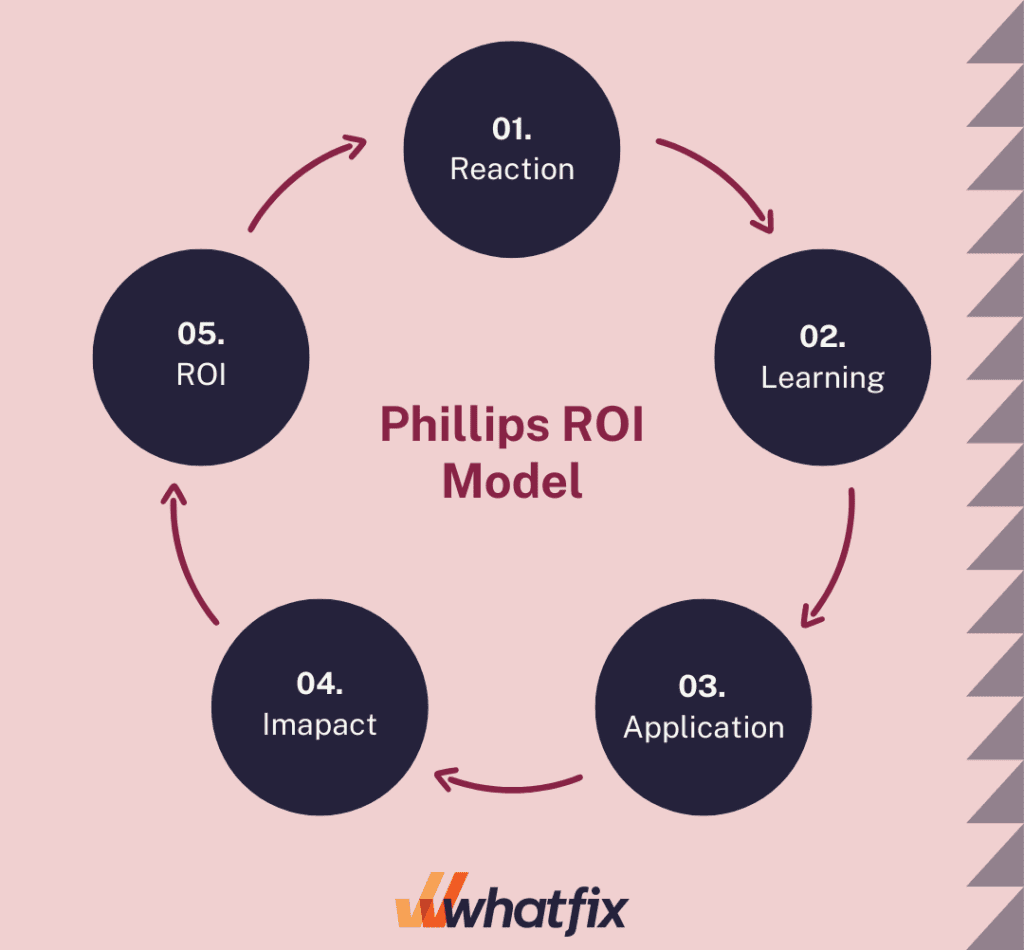

The Phillips ROI Model is a methodology that ties the costs of training programs to their actual results. It builds on the Kirkpatrick Model and classifies data from different types of employee training programs to measure:

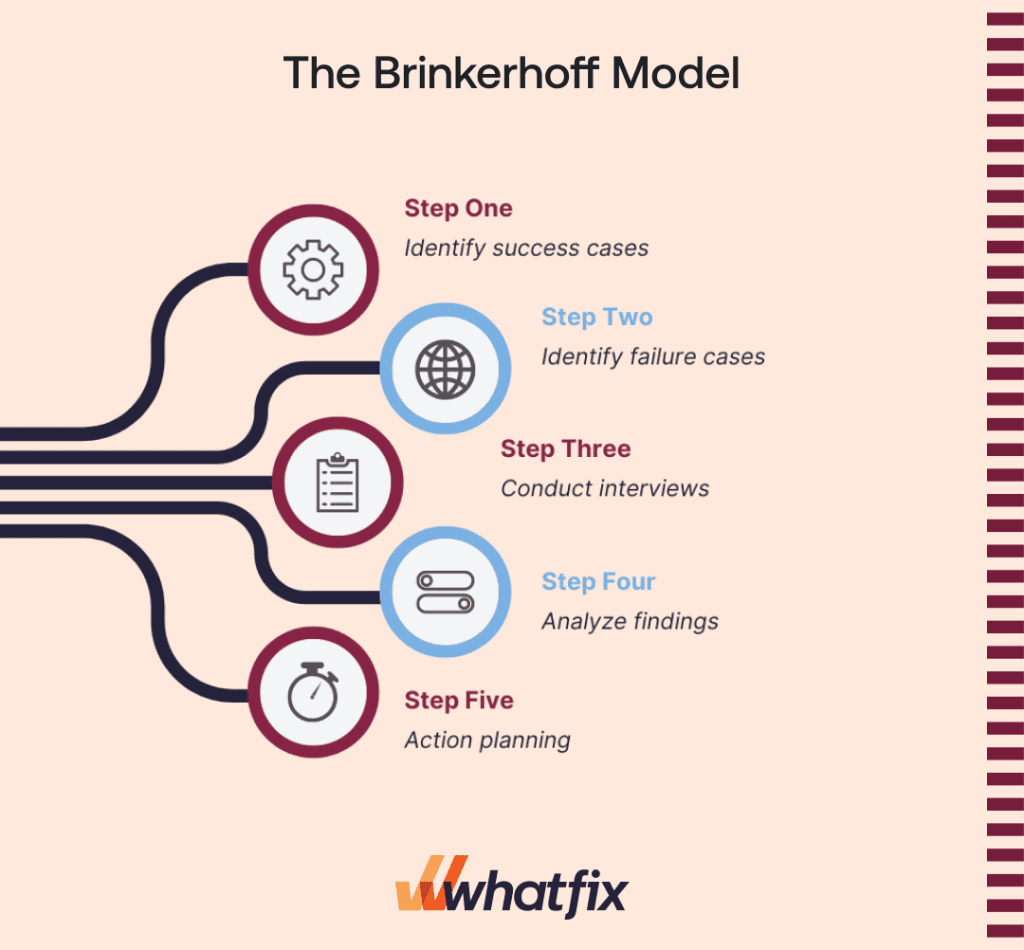

Brinkerhoff’s success case method is a comprehensive training evaluation model developed by Robert O. Brinkerhoff. It aims to identify and analyze the impact of training programs by focusing on extreme cases of success or failure.

Here are the key steps involved in implementing Brinkerhoff’s success case method:

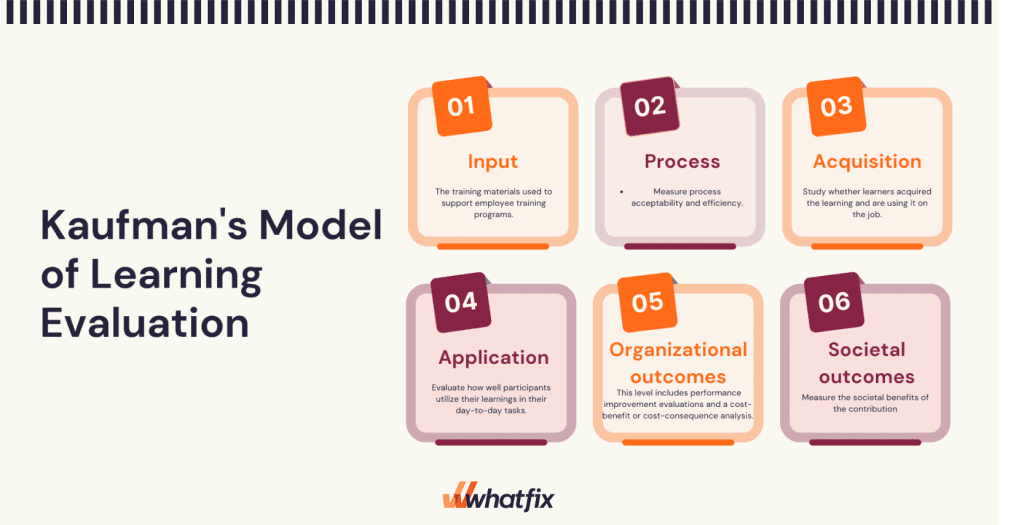

Kaufman’s model also builds on the foundation of the Kirkpatrick Model. It was developed as a response to Kirkpatrick’s model and aims to improve upon it. Kaufman’s five levels of training evaluation include:

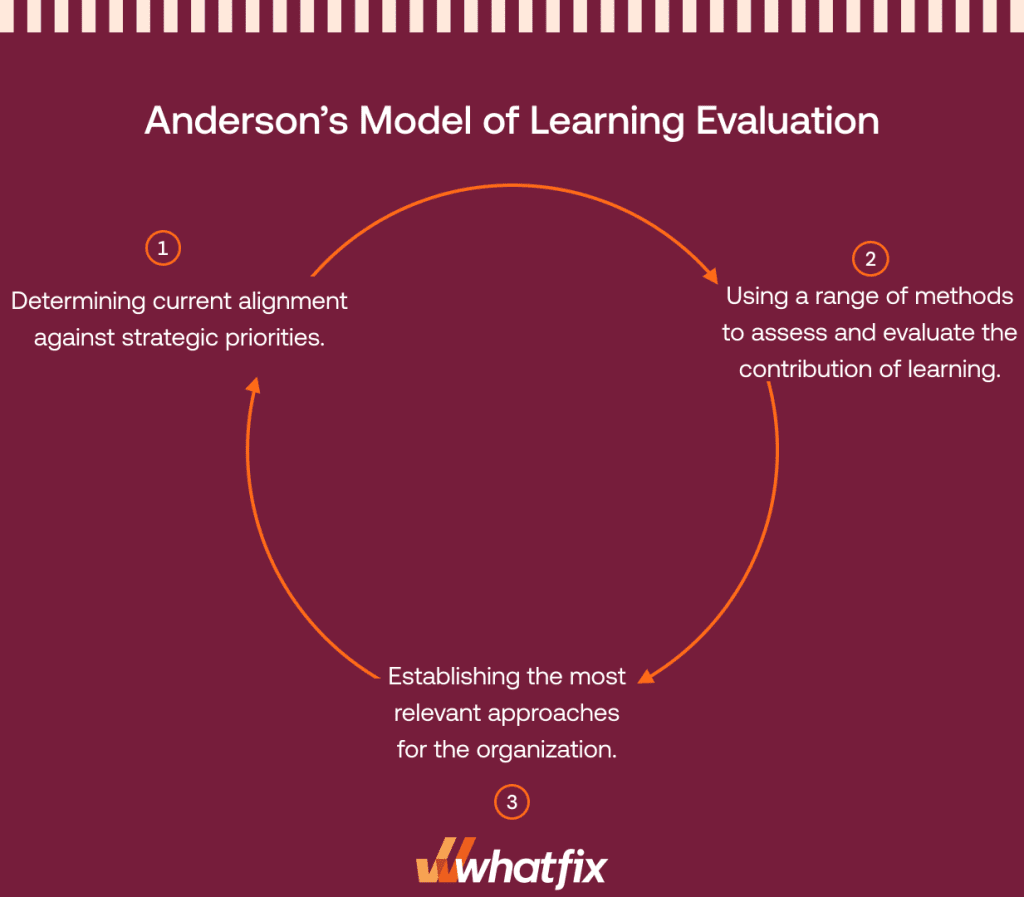

The Anderson learning evaluation model is a three-stage evaluation cycle applied at an organizational level to measure training effectiveness. The model is primarily concerned with aligning training goals with the organization’s strategic objectives.

The three stages of Anderson’s Model include:

Training evaluation models offer a range of benefits that contribute to the overall effectiveness and impact of training initiatives within organizations.

Training evaluation models provide structured methodologies to measure training effectiveness. By assessing participant reactions, learning outcomes, behavior changes, and organizational impact, these models offer a comprehensive view of how well the training aligns with its objectives and contributes to performance improvement.

Evaluation models help L&D teams identify both strengths and weaknesses in their training initiatives. By collecting and analyzing data at various levels, organizations can pinpoint what aspects of the training are working well and where improvements are needed. These insights guide decision-making for refining content, training delivery methods, and overall training strategies.

Through training evaluation models, organizations gain insights into how training content and materials are being received by participants. This information allows them to tailor training content to better match participants’ needs, ensuring that the training remains engaging, relevant, and aligned with the intended learning outcomes.

Effective training evaluation models enable organizations to assess the return on investment (ROI) of their training initiatives. By measuring the impact of training on job performance, productivity, and organizational outcomes, organizations can make informed decisions about the allocation of resources and ensure that training efforts are delivering measurable value. Consider the 70-20-10 model as a learning framework to further increase training ROI.
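The ROI assessment described above is commonly expressed as net program benefits divided by program costs, multiplied by 100, alongside a simple benefit-cost ratio. The sketch below illustrates that arithmetic. The function names and the dollar figures are hypothetical, and it assumes benefits have already been converted to monetary values, which is usually the hardest part in practice.

```python
def training_roi_percent(total_benefits: float, total_costs: float) -> float:
    """Return ROI as a percentage: net benefits divided by costs, times 100."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    net_benefits = total_benefits - total_costs
    return net_benefits / total_costs * 100


def benefit_cost_ratio(total_benefits: float, total_costs: float) -> float:
    """Return the benefit-cost ratio: monetary benefits per unit of cost."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return total_benefits / total_costs


# Hypothetical figures for illustration only.
program_benefits = 150_000  # estimated monetary value of improvements
program_costs = 100_000     # design, delivery, and participant time

print(training_roi_percent(program_benefits, program_costs))  # 50.0
print(benefit_cost_ratio(program_benefits, program_costs))    # 1.5
```

An ROI of 50% here means that each dollar spent on the program returned the original dollar plus fifty cents in estimated net benefit.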

Training evaluation models encourage accountability at various levels. Participants are held accountable for their engagement and application of training content, while trainers and instructional designers are accountable for the effectiveness of their materials. Additionally, organizations can use evaluation results to hold stakeholders accountable for the success of training initiatives within the broader context of business goals.

Implementing training evaluation models can present several challenges that organizations need to navigate effectively to ensure the success and value of their training initiatives.

One of the primary challenges is the allocation of sufficient resources, including time, budget, and personnel, for the evaluation process. Organizations might find it difficult to dedicate resources to data collection, analysis, and reporting, especially when facing tight deadlines and competing priorities. Balancing the need for thorough evaluation with limited resources requires careful planning and prioritization.

Gaining buy-in from stakeholders, including leadership, trainers, participants, and decision-makers, can be challenging. Some stakeholders may view evaluation as time-consuming or unnecessary, particularly if they don’t fully understand the value it brings. Effective communication about the benefits of evaluation and its role in improving training outcomes is crucial for overcoming this challenge.

Many training evaluation models involve multiple levels of assessment, data collection methods, and analysis techniques. The complexity of these models can be overwhelming, especially for organizations new to the evaluation process. Striking a balance between a comprehensive evaluation approach and one that is manageable and aligned with organizational needs is a challenge that organizations must address.

Implementing training evaluation models requires careful planning and execution to ensure accurate and meaningful results. Here are some best practices to consider:

Ensure that your evaluation aligns with the specific goals and objectives of the training program. Each level of the evaluation should reflect the intended outcomes of the training, from knowledge acquisition to behavior change and organizational impact.

Engage key stakeholders, including trainers, participants, and organizational leaders, while designing the evaluation process. Their input ensures that the evaluation model captures relevant aspects of the training and addresses their expectations.

Design data collection methods that are both reliable and appropriate for each evaluation level. This includes pre- and post-training surveys, assessments, interviews, observations, and performance metrics. Ensuring data accuracy and consistency is crucial for meaningful analysis.

Before implementing the training, establish baseline measures to assess the initial state of participants’ knowledge, skills, and behaviors. This baseline serves as a reference point for evaluating the impact of the training and identifying improvements.
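As a minimal sketch of how a baseline can serve as a reference point, the example below compares pre-training and post-training assessment scores for the same participants and reports the average change. The function name and the scores are hypothetical, and it assumes both assessments use the same scale and list participants in the same order.

```python
from statistics import mean


def average_score_gain(pre_scores, post_scores):
    """Return the mean per-participant change from baseline to post-training."""
    if not pre_scores or len(pre_scores) != len(post_scores):
        raise ValueError("score lists must be non-empty and the same length")
    # Pair each participant's baseline score with their post-training score.
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return mean(gains)


# Hypothetical assessment scores (out of 100) for three participants.
baseline = [50, 60, 70]
after_training = [70, 75, 80]

print(average_score_gain(baseline, after_training))  # 15
```

A positive average suggests improvement over the baseline, although a simple before-and-after comparison cannot by itself rule out other causes of the change.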

Create a safe and non-judgmental environment that encourages participants to provide honest feedback about the training. Anonymous surveys or focus groups enable participants to share their thoughts openly, providing valuable insights for improvement.

Share the evaluation results with stakeholders in a clear and transparent manner. Highlight successes and areas for improvement, and show how the data aligns with the training objectives. Effective communication builds trust and demonstrates the value of evaluation efforts.

Treat training evaluation as an ongoing process of improvement. Regularly review the evaluation methods, incorporate feedback, and refine the process to enhance its effectiveness over time.

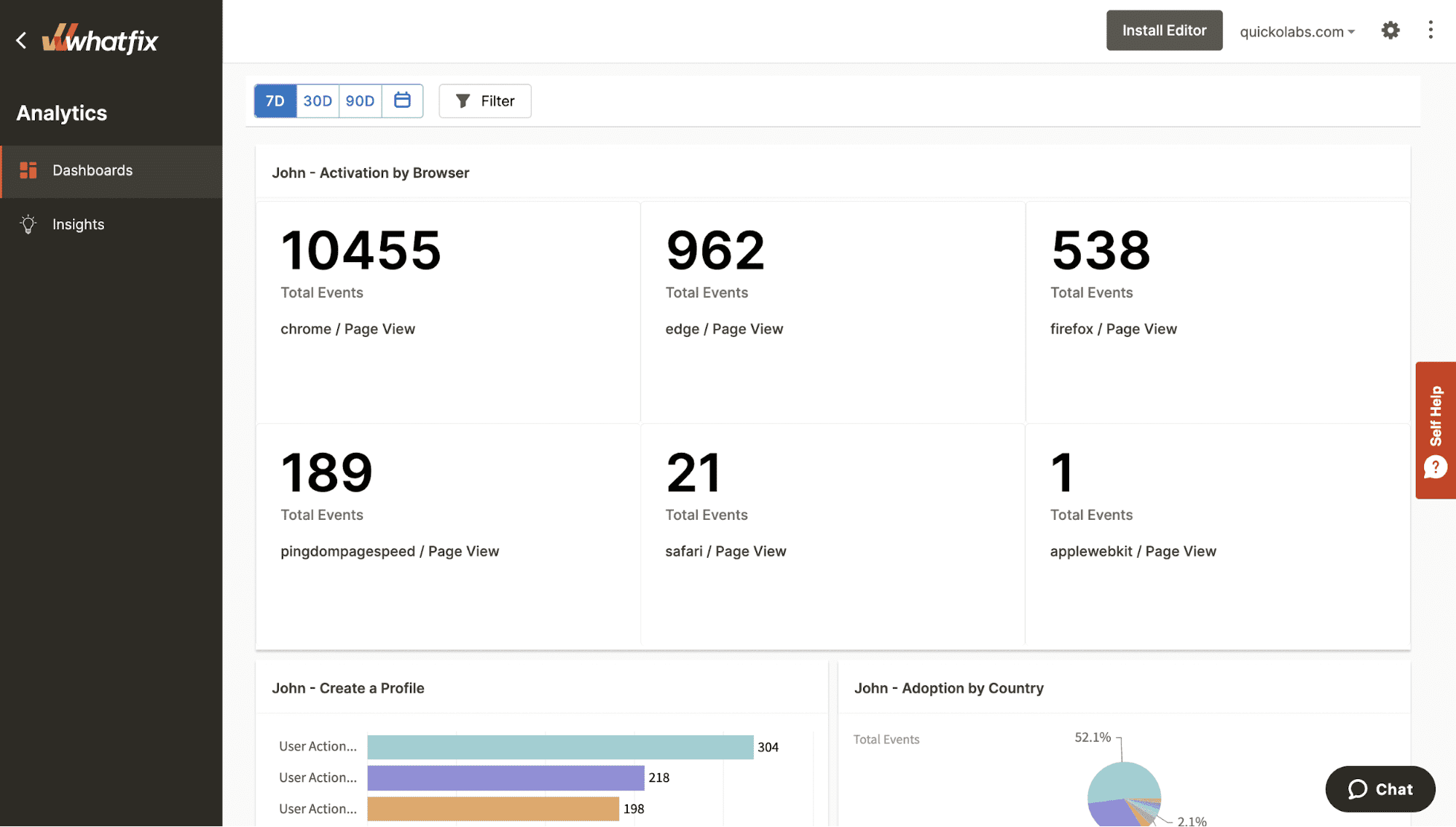

Implementing a digital adoption platform such as Whatfix can significantly contribute to evaluating your training programs by providing insights into learners’ actual usage and proficiency with digital tools and processes.

These platforms offer real-time analytics that track employees’ interactions with software applications and digital workflows, capturing data on their actions, completion rates, and task efficiency.

By analyzing this data, L&D professionals can evaluate learners’ adoption and application of the training content in real-world scenarios.

With the ability to monitor user engagement, performance, and feedback, DAPs offer a comprehensive view of training outcomes, enabling organizations to assess their training initiatives’ effectiveness, identify improvement areas, and make data-driven decisions to optimize the learning experience and maximize digital adoption success.

