Organizations invest heavily in learning and development programs to help employees develop new skills, drive performance, and achieve strategic goals. However, a critical question often arises: how can L&D teams measure training effectiveness?

One solution to evaluating training success is the Kirkpatrick Model.

Traditional training evaluation methods often focus on participant feedback or test scores, overlooking the broader impact on workplace behavior and business outcomes. The Kirkpatrick Model addresses this gap by providing a comprehensive, four-level framework to assess training effectiveness.

Beyond immediate reactions and learning outcomes, it examines how employees apply their knowledge on the job and how that application affects organizational success. By using this model, organizations can verify that their training initiatives deliver measurable value, foster continuous improvement, and align with long-term business objectives.

In this article, we’ll explore the Kirkpatrick Model of training evaluation, break down its four levels, and address its criticisms.

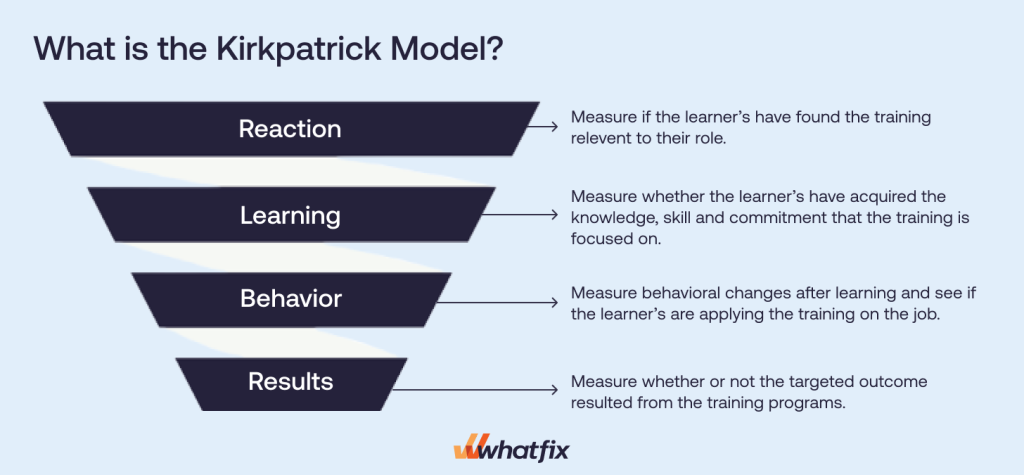

What Is the Kirkpatrick Model?

The Kirkpatrick Model is a widely used framework for analyzing and evaluating the effectiveness and results of employee training programs. It applies to both formal and informal training and rates each program against the following four levels:

- Reaction of the learner and their thoughts on the training program.

- Learning and increase in knowledge from the training program.

- Behavior change and improvement after applying the learnings on the job.

- Results that the learner’s performance has on the business.

Understanding the Four Levels of the Kirkpatrick Model

Each level of the Kirkpatrick Model focuses on different objectives and outcomes for learners. Here is an in-depth explanation of all four levels of the Kirkpatrick Model.

Level 1: Reaction

The first level focuses on how participants respond to the training. There are three parts to the reaction phase:

- Satisfaction: Are the learners happy with what they learned in the training?

- Engagement: How much did the learner engage and contribute to the learning experience?

- Relevance: How much of the information acquired will employees be able to apply in their jobs?

This stage ensures that the training methods, learning environment, instructional content, and training delivery meet the participants’ expectations and create a positive learning atmosphere.

Best practices for the reaction stage

- Use well-designed feedback surveys with specific, actionable questions focusing on program objectives, training materials, content relevance, facilitator knowledge, etc.

- Assess relevance, trainer effectiveness, materials, and overall satisfaction.

- Keep surveys concise to encourage completion and honest responses.

- Include open-ended questions for qualitative insights.

- Administer feedback forms immediately after the session to capture fresh impressions.

Example

A small sales company rolls out a new CRM platform for its sales reps to drive sales productivity and eliminate manual tasks. To get the maximum return on its investment, the company must train the sales reps to use the platform to its full potential.

The company hires a trainer to host the first one-hour CRM training session. The session’s objective is to teach the sales reps how to create opportunities in the CRM and start migrating from spreadsheets to the new platform. The instructor puts in their best effort to provide a comprehensive training experience for the learners.

But how does the company know how well the employees engaged with the training session?

At the end of the training, employees are given an online survey to rate, on a scale of 1 to 5, how relevant the training was to their jobs, how engaging it was, and how satisfied they are with the knowledge they gained.

There’s also a question about whether they would recommend the training to a colleague and whether they’re confident they can use the new CRM tool for sales activities.

In this example, efforts are made to collect data about how the participants initially react to the training. The collected data is then used to address concerns about the training experience and provide a much better experience to the participants in the future.
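As a rough illustration, the 1-to-5 survey ratings from this example could be summarized as follows. This is a minimal sketch: the sample responses, the `summarize_reactions` helper, and the 3.5 threshold are all hypothetical and would need to be replaced with your own survey export and cutoff.

```python
# Hypothetical survey responses: one dict per participant, ratings on a 1-5 scale.
responses = [
    {"relevance": 5, "engagement": 4, "satisfaction": 4},
    {"relevance": 4, "engagement": 3, "satisfaction": 5},
    {"relevance": 3, "engagement": 2, "satisfaction": 4},
    {"relevance": 4, "engagement": 3, "satisfaction": 3},
]


def summarize_reactions(responses, threshold=3.5):
    """Return the mean rating per dimension and the dimensions below the threshold."""
    dimensions = list(responses[0].keys())
    averages = {
        d: round(sum(r[d] for r in responses) / len(responses), 2)
        for d in dimensions
    }
    # Dimensions with a low average are candidates for follow-up before the next session.
    needs_attention = [d for d, avg in averages.items() if avg < threshold]
    return averages, needs_attention


averages, needs_attention = summarize_reactions(responses)
print(averages)
print(needs_attention)
```

Averages like these only show where to look. The open-ended survey answers are still needed to understand why a dimension scored low.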

Level 2: Learning

This level evaluates the extent to which participants have acquired the intended knowledge, skills, and attitudes. It measures learning outcomes through tests, quizzes, or practical exercises conducted before and after the training.

Best practices for the learning stage

- Use pre- and post-training assessments to measure knowledge or skill improvement.

- Include both theoretical and practical evaluations to ensure well-rounded learning.

- Align assessments with clearly defined training objectives.

- Provide immediate feedback on tests or exercises to reinforce learning.

Example

Carrying forward the example from the previous section, let’s see what an example of the level 2 evaluation would look like.

The objective of the training session is to teach employees how to create opportunities in their new CRM and facilitate a smooth transition away from spreadsheets, improving the organization’s sales data quality. To that end, a training program is implemented for 20 sales reps to learn the opportunity creation process.

To determine if the learners gained the intended knowledge from the CRM training session, the trainer asks them to demonstrate creating a new opportunity on the CRM platform.

This helps the trainer understand whether the sales reps are ready for the next training session. The trainer may also deliver a short multiple-choice assessment before and after the training session to measure how much knowledge the learners gained.
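The before-and-after comparison can be as simple as subtracting each learner’s pre-training score from their post-training score. The sketch below assumes a 10-point quiz. The rep names, scores, and the `score_gains` helper are made up for illustration.

```python
# Hypothetical quiz scores out of 10, keyed by sales rep.
pre_scores = {"rep_a": 4, "rep_b": 6, "rep_c": 7, "rep_d": 5}
post_scores = {"rep_a": 8, "rep_b": 9, "rep_c": 7, "rep_d": 9}


def score_gains(pre, post):
    """Return post-minus-pre gain for every learner who took both assessments."""
    return {name: post[name] - pre[name] for name in pre if name in post}


gains = score_gains(pre_scores, post_scores)
average_gain = sum(gains.values()) / len(gains)
# Learners whose score did not improve may need extra practice before the next session.
no_improvement = sorted(name for name, gain in gains.items() if gain <= 0)

print(gains)
print(average_gain)
print(no_improvement)
```

A raw gain is easy to read but favors learners who started with low scores, so it is worth looking at the final scores as well.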

Level 3: Behavior

The behavior phase is one of the most crucial steps in the Kirkpatrick Model. It measures whether the learning truly impacted participants and whether they applied it to their daily tasks.

This level analyzes the differences in participants’ work behavior after they complete the program. This helps determine whether the knowledge and skills the program taught are being used in the workplace and what impact they have had.

Testing behavior is challenging because it is difficult to anticipate when a trainee will start applying what they learned. This makes it harder to decide when, how often, and how to evaluate a participant after the training.

Best practices for the behavior stage

- Observe participants’ performance on the job over time to identify behavioral changes.

- Collect feedback from managers, colleagues, and customers to validate observations.

- Provide follow-up sessions, coaching, or mentoring to reinforce desired behaviors.

- Create a supportive work environment that encourages the application of new skills.

Example

To evaluate the behavior level in our CRM training example, keep measuring the CRM adoption metrics that matter to your organization through end-user feedback loops and data analytics. Three to six months after the training session, pull win rates and sales performance KPIs for all training participants, along with the time each one takes to create an opportunity, and look for behavior changes.

Compare each trainee’s metrics to see which trainees have adopted the new CRM and are using it in their daily tasks. Additionally, use your employee analytics to gather insights into which employees are engaging with the training and implementing their learnings on the job and which are underutilizing the tool.
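One way to make this per-trainee comparison concrete is to aggregate an export of CRM events by rep. The sketch below is only an assumption about what such an export might look like: the `(rep, seconds)` event format, the `adoption_by_rep` helper, and the minimum of three opportunities are hypothetical.

```python
from collections import defaultdict
from statistics import median

# Hypothetical CRM export: (rep, seconds taken to create one opportunity).
events = [
    ("rep_a", 95), ("rep_a", 80), ("rep_a", 70),
    ("rep_b", 240),
    ("rep_c", 120), ("rep_c", 110), ("rep_c", 100), ("rep_c", 90),
]
participants = ["rep_a", "rep_b", "rep_c", "rep_d"]


def adoption_by_rep(events, participants, min_opportunities=3):
    """Summarize CRM usage per participant and flag those underutilizing the tool."""
    durations = defaultdict(list)
    for rep, seconds in events:
        durations[rep].append(seconds)

    report = {}
    for rep in participants:
        times = durations.get(rep, [])
        report[rep] = {
            "opportunities": len(times),
            # No median is reported for reps with no CRM activity at all.
            "median_seconds": median(times) if times else None,
            "underutilizing": len(times) < min_opportunities,
        }
    return report


report = adoption_by_rep(events, participants)
for rep, stats in report.items():
    print(rep, stats)
```

Listing every participant, including those with no events, matters here: a rep who never appears in the export is exactly the one this level is meant to surface.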

Level 4: Results

The results level of the Kirkpatrick Model, commonly regarded as the program’s primary goal, is dedicated to measuring the overall success of a training program. It measures learning against the organization’s business outcomes (i.e., the KPIs established at the start of the training).

Common KPIs include:

- A boost in sales

- Lowered spending

- Improved quality of products

- Fewer accidents in the workplace

- Increased productivity

- Increased customer satisfaction

Best practices for the results stage

- Define specific, measurable KPIs aligned with training objectives.

- Use a mix of quantitative (e.g., sales growth) and qualitative (e.g., employee morale) data to evaluate impact.

- Correlate training outcomes with business results while considering external factors.

- Conduct long-term assessments to capture sustained benefits.

Example

In our CRM implementation example, the primary metrics the training evaluators examine are opportunity creation in the CRM and data migration from old spreadsheets.

To measure results, observe whether new opportunities are being created on the CRM platform or in the old spreadsheets. If all 20 participants are now creating opportunities on the CRM tool and saving time, the training program was effective and contributed to the success of the sales team and the organization as a whole.

On the other hand, if some of the participants still use spreadsheets, the training wasn’t as useful for them. In this case, L&D teams can either arrange a different training method for these employees or figure out if there is any other reason behind their resistance to change and work accordingly.
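The check described above comes down to an adoption rate plus a list of participants who still log opportunities in spreadsheets. Here is a minimal sketch, using four hypothetical reps instead of the full group of 20. The `logged` data and the `crm_adoption` helper are assumptions for illustration.

```python
# Hypothetical count of opportunities each participant logged per tool in the review period.
logged = {
    "rep_a": {"crm": 12, "spreadsheet": 0},
    "rep_b": {"crm": 9, "spreadsheet": 0},
    "rep_c": {"crm": 3, "spreadsheet": 6},
    "rep_d": {"crm": 10, "spreadsheet": 0},
}


def crm_adoption(logged):
    """Return the share of participants working only in the CRM and the spreadsheet holdouts."""
    holdouts = sorted(rep for rep, counts in logged.items() if counts["spreadsheet"] > 0)
    adoption_rate = (len(logged) - len(holdouts)) / len(logged)
    return adoption_rate, holdouts


adoption_rate, holdouts = crm_adoption(logged)
print(adoption_rate)
print(holdouts)
```

The holdout list is the starting point for the follow-up described above, whether that means a different training method or a conversation about resistance to change.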

Benefits of the Kirkpatrick Model

Here are some significant benefits of implementing the Kirkpatrick Model of training evaluation.

- Comprehensive evaluation: The model assesses training effectiveness across multiple dimensions like participant satisfaction, knowledge acquisition, behavior changes, and organizational impact. This ensures a holistic understanding of training outcomes.

- Clear success metrics: Each level provides specific, measurable criteria to evaluate success, making it easier to identify strengths and areas for improvement in training programs.

- Focus on business impact and results-based outcomes: By linking training outcomes to organizational goals, the model emphasizes tangible results, such as improved productivity, customer satisfaction, or revenue growth.

- Promotes continuous improvement: Feedback collected at each level informs adjustments to training design and delivery, fostering an iterative process for ongoing enhancement.

- Encourages accountability: The model highlights the shared responsibility of trainers, managers, and participants in ensuring training success, particularly at the behavior and results levels.

- Customizable and scalable: It can be tailored to suit various industries, training formats, and organizational sizes, making it adaptable to diverse learning environments.

- Enhances stakeholder buy-in: Demonstrating the measurable value of training programs builds trust and support from stakeholders, including leadership and decision-makers.

Criticisms of the Kirkpatrick Model

Here are some of the challenges faced while implementing the Kirkpatrick Model:

- Resource-intensive: Implementing the model, especially at Levels 3 (Behavior) and 4 (Results), requires significant time, effort, and resources to gather and analyze data effectively.

- Difficulties in isolating the effect on business results: Attributing improvements in business outcomes solely to training can be challenging, as other factors, such as market conditions or organizational changes, may also contribute.

- Subjectivity in some feedback methods: Feedback collected at Level 1 (Reaction) and Level 3 (Behavior) can be subjective, relying heavily on participant and manager perceptions, which may not always reflect true training effectiveness.

Best Practices for Implementing the Kirkpatrick Model

Here are a few best practices for implementing the Kirkpatrick Model of training evaluation.

- Start with clear learning and business objectives: Define specific learning outcomes and align them with broader business goals to ensure the training effectively addresses organizational needs.

- Plan evaluation methods from the beginning: Design evaluation strategies during the training development phase, ensuring you have tools and processes in place to measure success at each level.

- Leverage technology for efficiency: Modern L&D technologies like learning management systems and digital adoption platforms provide interactive learning experiences for employees and often automate data collection and analysis for training programs. A tool like Whatfix Product Analytics goes further, giving application owners a no-code event-tracking solution for analyzing user behavior on internal software to identify process adoption, task time-to-completion, workflow governance, and more.

- Collect actionable data: Gather both quantitative (e.g., test scores and performance metrics) and qualitative (e.g., feedback forms) data to identify trends and drive improvements in training.

- Communicate results effectively: Tailor reports to different stakeholders, highlighting key findings and the training program’s impact on business objectives to ensure continued support and alignment.

- Use control groups: Compare trained and untrained groups to isolate the impact of training on performance.

- Streamline processes: Focus on the most critical levels for evaluation based on training goals and available resources.
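To illustrate the control-group practice from the list above, the sketch below compares a trained group with an untrained one on a single metric. The weekly opportunity counts and the `training_uplift` helper are hypothetical, and a simple difference in means like this does not account for group size or statistical significance.

```python
from statistics import mean

# Hypothetical opportunities created per week by each rep in the two groups.
trained = [12, 15, 11, 14]
untrained = [9, 10, 8, 9]


def training_uplift(trained, untrained):
    """Return the absolute and relative difference in group means (trained vs. untrained)."""
    baseline = mean(untrained)
    difference = mean(trained) - baseline
    return difference, difference / baseline


difference, relative_uplift = training_uplift(trained, untrained)
print(difference)
print(round(relative_uplift, 3))
```

For the comparison to be meaningful, the two groups should be similar in role, territory, and experience, and measured over the same period.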

Training Clicks Better With Whatfix

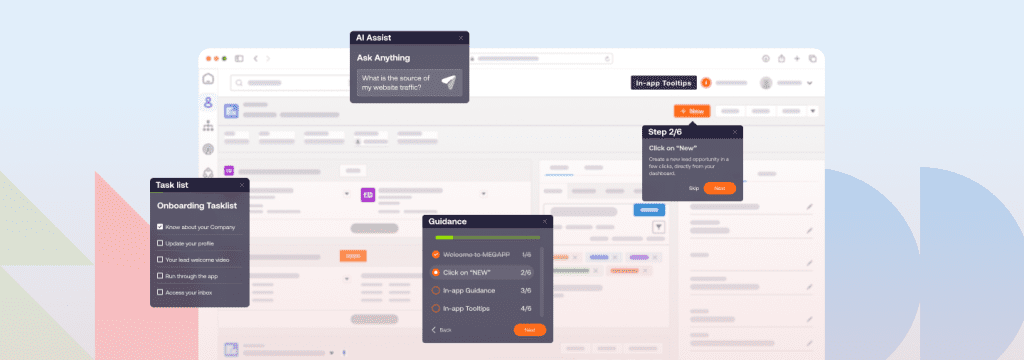

Whatfix redefines employee training by seamlessly integrating learning into daily workflows, making it a natural part of the job. With its suite of innovative tools, such as Whatfix Mirror, Whatfix DAP, and Product Analytics, organizations can create training programs that truly “click” with employees.

Whatfix Mirror revolutionizes simulation-based learning by:

- Easily creating replica sandbox environments of all your enterprise applications.

- Accelerating time-to-proficiency for new hires with hands-on experiences in a risk-free simulation environment that reduces the chance of costly errors.

Whatfix DAP evolves training from static, one-size-fits-all programs to dynamic, contextual guidance by:

- Creating contextual in-app guidance and on-screen overlays that support employees on different tasks.

- Providing guidance to improve process governance and reduce time-to-completion of core tasks.

- Providing constant support for employees within enterprise applications by integrating knowledge repositories and SOPs with self-help.

- Providing smart tips and interactive walkthroughs for employees to complete complex processes.

- Collecting end-user feedback to create feedback loops that improve your in-app guidance and training programs.

Whatfix Product Analytics helps measure training effectiveness by:

- Providing a no-code event-tracking solution to analyze user behavior on internal software and customer-facing apps.

- Identifying friction points and inefficiencies in workflows to optimize processes and improve product experiences.

To learn more about Whatfix, schedule a free demo with us today!